The robots.txt file is a handy tool that lets website owners control which parts of a site search engine crawlers and other automated agents may access. The file sits at the root of the site (for example, https://example.com/robots.txt) and tells these agents which paths they may crawl and which ones they should skip.
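To see how a well-behaved crawler reads these rules, here is a minimal sketch using Python's standard urllib.robotparser module. The robots.txt content and the example.com URLs are hypothetical and only illustrate how a Disallow rule is applied.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
# parse() takes the file's lines directly, so no network request is made.
parser.parse(SAMPLE_ROBOTS_TXT.splitlines())

# Ask whether any user agent ("*") may fetch each URL.
print(parser.can_fetch("*", "https://example.com/private/report.html"))  # False
print(parser.can_fetch("*", "https://example.com/blog/post.html"))       # True
```

A real crawler would first download the file from the site root; if that download fails, it has no rules to apply, which is exactly the situation the “Robots.txt unreachable” error describes.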

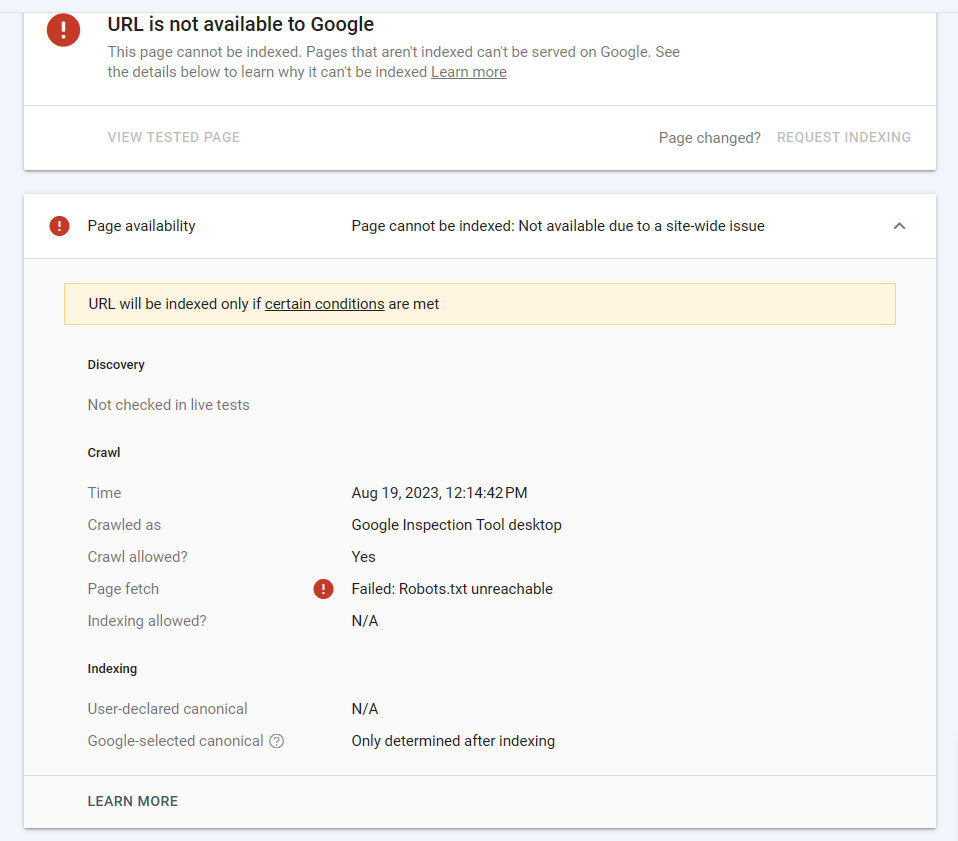

In some situations, you might see the error message “Robots.txt unreachable” when a crawler tries to fetch the file. This error can prevent search engines and other automated tools from crawling and indexing your site, resulting in poorer visibility and lower traffic. Possible causes include incorrect file permissions, an outdated robots.txt file, server configuration problems, and conflicts between robots.txt and .htaccess rules (for example, a rewrite rule that sends requests for robots.txt to a script instead of serving the file).

To fix this issue, first make sure that your site’s robots.txt and .htaccess files are correctly configured and up to date. Check the server logs for any errors or warnings related to these files. In some cases, you may need to adjust your server settings or ask your hosting provider for help. Tools such as Screaming Frog or Google Search Console can also help you diagnose and resolve these issues.
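Before changing any configuration, it can help to confirm what the server actually returns for robots.txt. The sketch below uses only the Python standard library; the function names check_robots and classify_robots_status are hypothetical, and the mapping from status code to outcome is an assumption loosely modeled on Google’s published robots.txt handling (other crawlers may behave differently).

```python
import urllib.error
import urllib.request
from typing import Optional, Tuple


def classify_robots_status(status: int) -> str:
    """Map an HTTP status for /robots.txt to a rough crawler outcome.

    Assumption: loosely modeled on Google's documented robots.txt handling.
    """
    if 200 <= status < 300:
        return "ok"           # file fetched; its rules are applied
    if 300 <= status < 400:
        return "redirect"     # crawlers follow a limited number of redirects
    if status == 429 or 500 <= status < 600:
        return "unreachable"  # server error or throttling: crawling may pause
    if 400 <= status < 500:
        return "missing"      # treated as if no robots.txt exists
    return "unknown"


def check_robots(site: str, timeout: float = 10.0) -> Tuple[Optional[int], str]:
    """Fetch <site>/robots.txt and classify the response."""
    url = site.rstrip("/") + "/robots.txt"
    try:
        # urlopen follows redirects itself, so this is the final status.
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status, classify_robots_status(resp.status)
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses are raised as HTTPError but still carry a code.
        return err.code, classify_robots_status(err.code)
    except OSError:
        # DNS failure, refused connection, or timeout: no HTTP status at all.
        return None, "unreachable"
```

For example, check_robots("https://example.com") returns a (status, outcome) pair; an outcome of "unreachable" points at the server or its configuration rather than at the contents of robots.txt.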

Solution #1: Using .htaccess

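Add the following rules to your .htaccess file, then save it. The last rule routes every top-level path, including robots.txt, to index.php, so the pass-through rule placed before it (flagged [L]) makes sure requests for robots.txt are served from the actual file.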

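```apache
Options +FollowSymlinks
RewriteEngine on
RewriteBase /

# Remove trailing slash (skip real directories to avoid a redirect loop)
RewriteCond %{REQUEST_FILENAME} !-d
RewriteRule ^(.*)/$ $1 [R=301,L]

# Allow robots.txt to pass through untouched
RewriteRule ^robots\.txt$ - [L]

# Route any other top-level path to index.php
RewriteRule ^([^/]*)$ index.php?page=$1 [NC,L]
```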
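Why the order of these rules matters can be shown with a small simulation. This is a simplified, hypothetical model written in Python, not Apache itself: it applies the two internal rewrite rules in a single pass, stops at the first match (like [L]), and ignores the external trailing-slash redirect. Paths are written without a leading slash, as mod_rewrite sees them in .htaccess context.

```python
import re
from typing import List, Tuple

# Each rule: (pattern, substitution, regex flags). "-" means "keep the path".
# Every rule stops processing when it matches, mimicking the [L] flag.
Rule = Tuple[str, str, int]

RULES: List[Rule] = [
    (r"^robots\.txt$", "-", 0),                           # pass-through rule
    (r"^([^/]*)$", r"index.php?page=\1", re.IGNORECASE),  # [NC] catch-all
]


def route(path: str, rules: List[Rule]) -> str:
    """Return the path after applying the first matching rule."""
    for pattern, substitution, flags in rules:
        match = re.match(pattern, path, flags)
        if match is None:
            continue  # rule does not apply; try the next one
        if substitution == "-":
            return path  # "-" leaves the request untouched
        return match.expand(substitution)
    return path  # no rule matched


print(route("robots.txt", RULES))      # robots.txt
print(route("about", RULES))           # index.php?page=about
print(route("robots.txt", RULES[1:]))  # index.php?page=robots.txt
```

The last call drops the pass-through rule: robots.txt is then handed to index.php, and if the script does not return the file, crawlers cannot read it.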

Overall, robots.txt and .htaccess are essential tools for managing how crawlers and visitors access your website: robots.txt guides crawlers, while .htaccess controls how the server handles requests. Both need proper configuration and maintenance to avoid issues like “robots.txt unreachable”.